Authors:

(1) Suriya Gunasekar, Microsoft Research;

(2) Yi Zhang, Microsoft Research;

(3) Jyoti Aneja, Microsoft Research;

(4) Caio César Teodoro Mendes, Microsoft Research;

(5) Allie Del Giorno, Microsoft Research;

(6) Sivakanth Gopi, Microsoft Research;

(7) Mojan Javaheripi, Microsoft Research;

(8) Piero Kauffmann, Microsoft Research;

(9) Gustavo de Rosa, Microsoft Research;

(10) Olli Saarikivi, Microsoft Research;

(11) Adil Salim, Microsoft Research;

(12) Shital Shah, Microsoft Research;

(13) Harkirat Singh Behl, Microsoft Research;

(14) Xin Wang, Microsoft Research;

(15) Sébastien Bubeck, Microsoft Research;

(16) Ronen Eldan, Microsoft Research;

(17) Adam Tauman Kalai, Microsoft Research;

(18) Yin Tat Lee, Microsoft Research;

(19) Yuanzhi Li, Microsoft Research.

Table of Links

- Abstract and 1. Introduction

- 2 Training details and the importance of high-quality data

- 2.1 Filtering of existing code datasets using a transformer-based classifier

- 2.2 Creation of synthetic textbook-quality datasets

- 2.3 Model architecture and training

- 3 Spikes of model capability after finetuning on CodeExercises, 3.1 Finetuning improves the model’s understanding, and 3.2 Finetuning improves the model’s ability to use external libraries

- 4 Evaluation on unconventional problems with LLM grading

- 5 Data pruning for unbiased performance evaluation

- 5.1 N-gram overlap and 5.2 Embedding and syntax-based similarity analysis

- 6 Conclusion and References

- A Additional examples for Section 3

- B Limitation of phi-1

- C Examples for Section 5

C Examples for Section 5

In this section, we provide example code pairs captured at different AST match rates. We also provide an example of a code pair obtained using embedding distance as the measure of similarity.

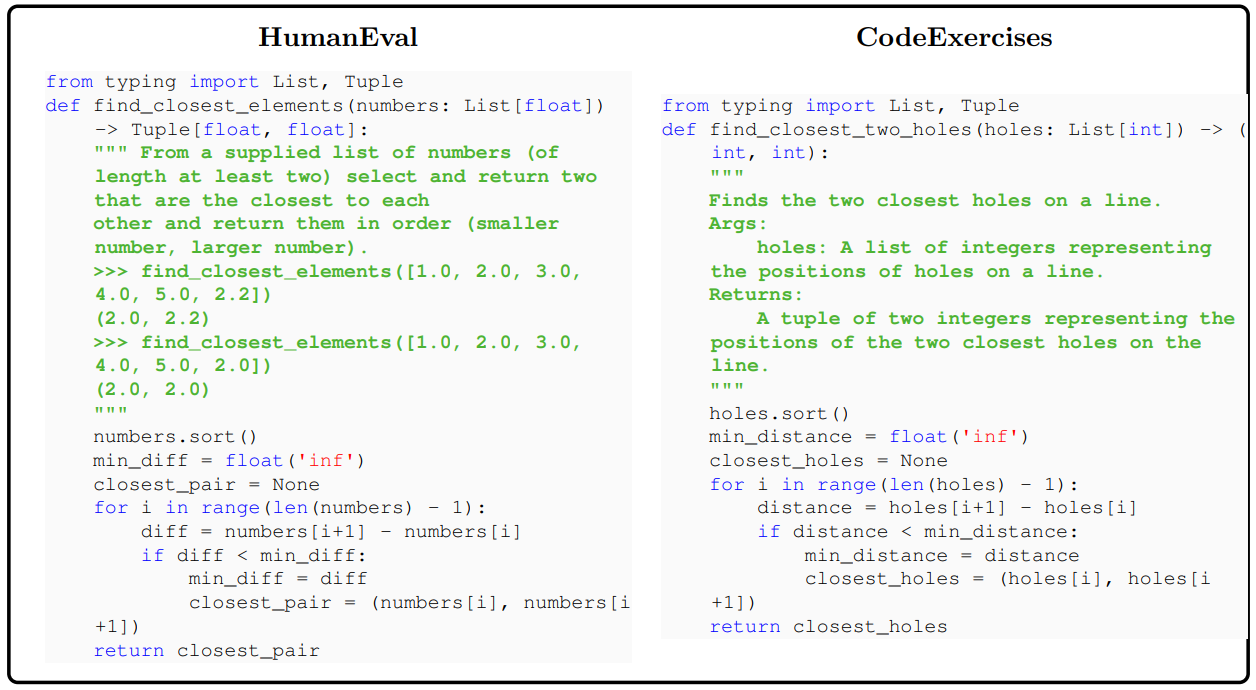

AST match rate = 1.0. Here the two coding problems require the same reasoning, although the wording of the prompts varies drastically. In particular, one prompt uses a real-world scenario, i.e., the distance between holes on a line, to implicitly teach the model the basic reasoning task of finding the closest pair of elements in an array.

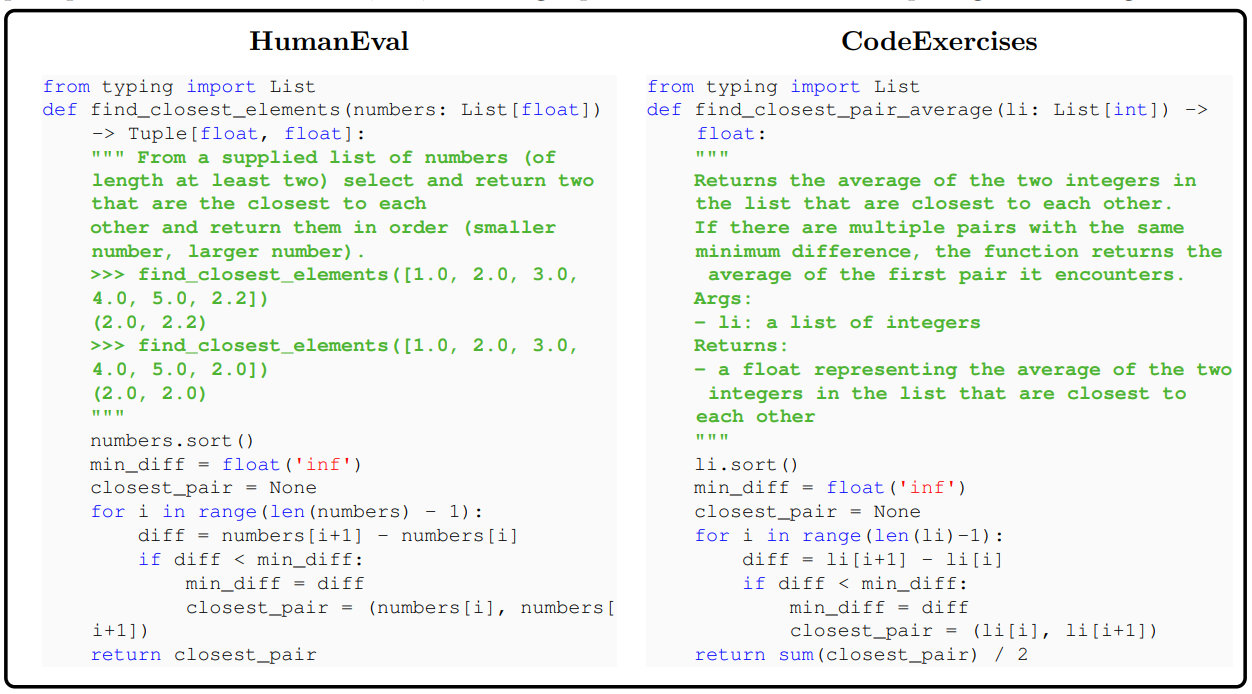

AST match rate = 0.96. Here the two problems use similar reasoning and coding concepts, but their prompts ask for different tasks, i.e., returning a pair of numbers versus computing their average.

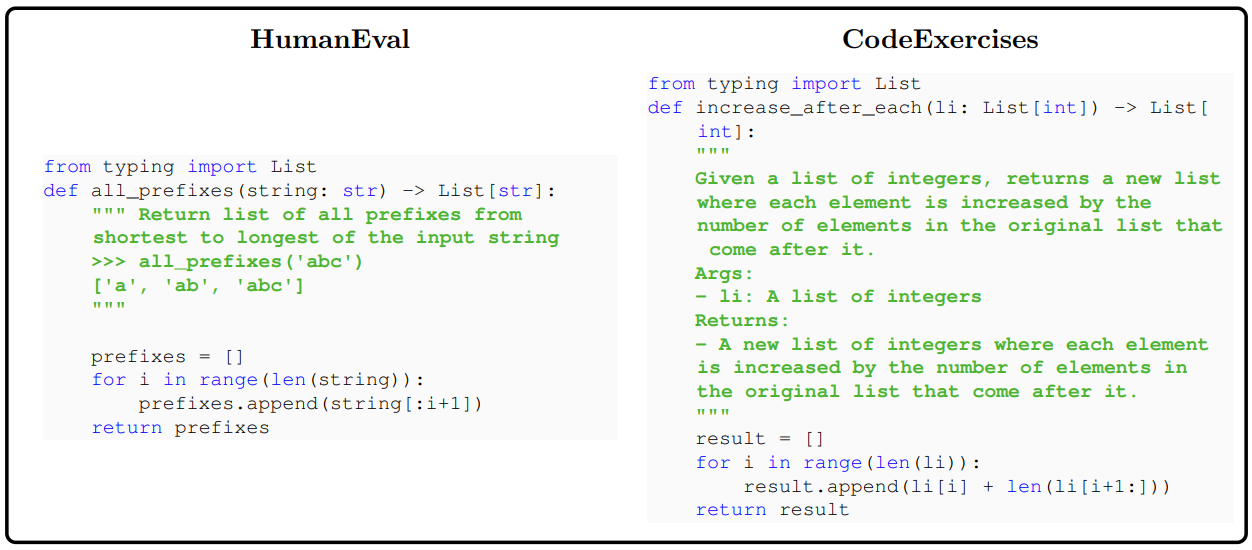

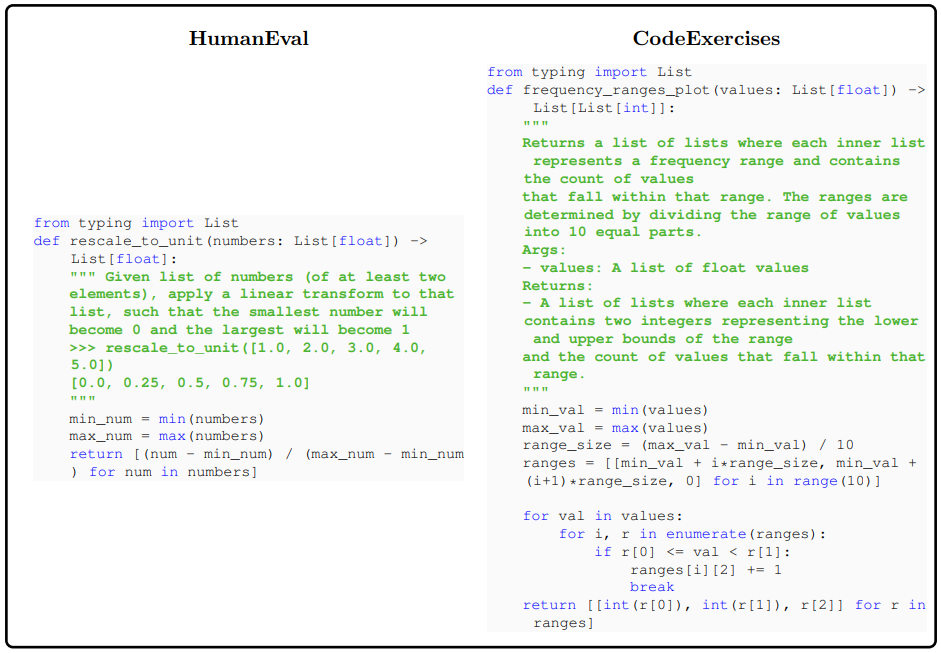

AST match rate ≤ 0.9. When the AST match rate is at most 0.9, the code pairs become noticeably less similar, as the two examples here show. Their AST match rates are 0.9 and 0.83, respectively.
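To make the idea of an AST match rate concrete, the following is a minimal sketch, not the procedure used to produce the numbers in this appendix. It assumes the match rate can be approximated by comparing the sequences of AST node types of two snippets with Python's standard `ast` and `difflib` modules. The helper names `node_types` and `ast_match_rate` are hypothetical. Identifier names and literal values are ignored, so renaming variables does not change the score, but docstrings still contribute nodes in this simplified version.

```python
import ast
import difflib


def node_types(source: str) -> list[str]:
    """Flatten a snippet's AST into a list of node-type names.

    Only the type of each node is kept (e.g. "FunctionDef", "For"),
    so variable names, function names and constants do not matter.
    """
    tree = ast.parse(source)
    return [type(node).__name__ for node in ast.walk(tree)]


def ast_match_rate(source_a: str, source_b: str) -> float:
    """Similarity in [0, 1] between the node-type sequences of two snippets.

    Assumption: difflib's ratio stands in for a normalized AST edit
    similarity; the exact metric behind the reported rates may differ.
    """
    matcher = difflib.SequenceMatcher(
        None, node_types(source_a), node_types(source_b), autojunk=False
    )
    return matcher.ratio()


# Two snippets with identical structure but different naming.
code_a = """
def closest_gap(values):
    values = sorted(values)
    best = None
    for i in range(len(values) - 1):
        gap = values[i + 1] - values[i]
        if best is None or gap < best:
            best = gap
    return best
"""

code_b = """
def nearest_holes(positions):
    positions = sorted(positions)
    smallest = None
    for k in range(len(positions) - 1):
        dist = positions[k + 1] - positions[k]
        if smallest is None or dist < smallest:
            smallest = dist
    return smallest
"""

# A structurally different snippet for contrast.
code_c = """
def average(values):
    return sum(values) / len(values)
"""

print(ast_match_rate(code_a, code_b))  # identical structure -> 1.0
print(ast_match_rate(code_a, code_c))  # different structure -> below 1.0
```

In this sketch, a pair that differs only in naming scores exactly 1.0, which mirrors the first example above, where the prompts differ in wording but the solutions share the same structure.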

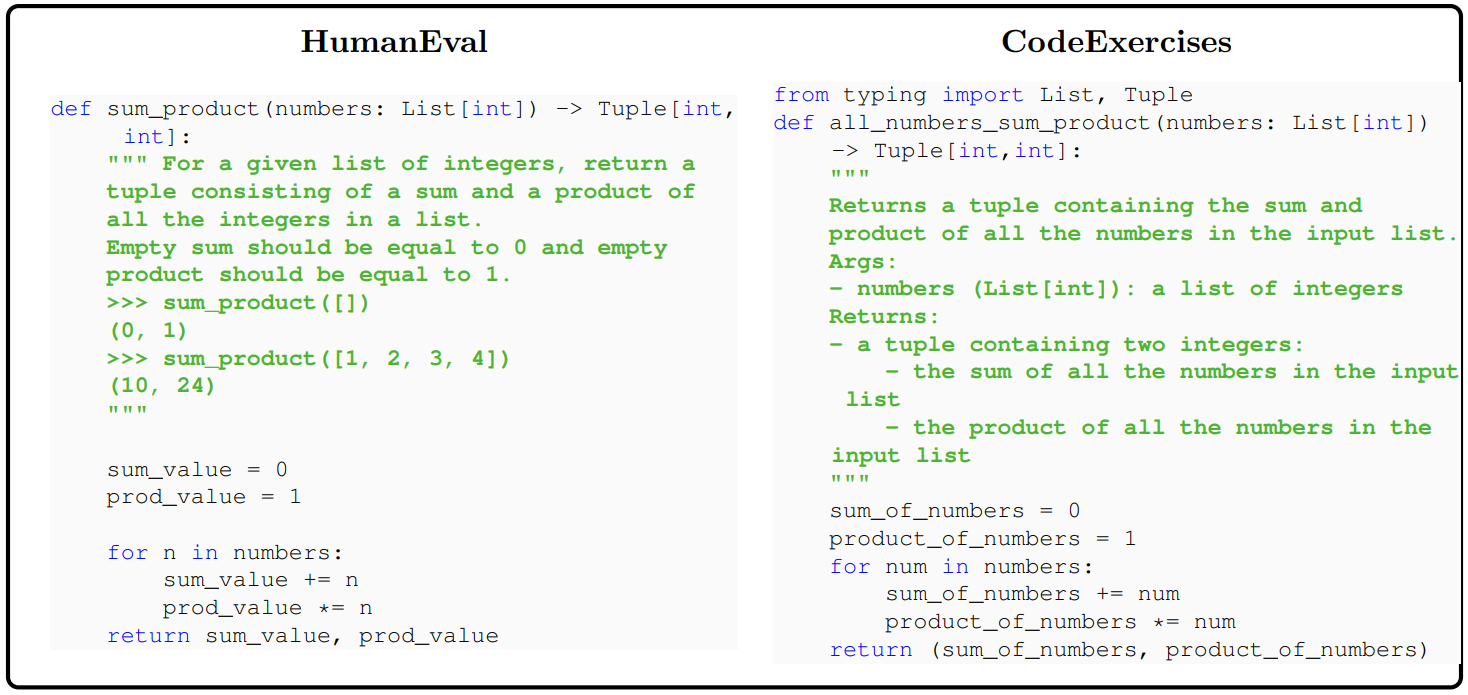

Embedding distance = 0.16. Here the two problems have similar Python docstrings, function names, and code structure. This similarity is captured by the L2 distance between the normalized CodeGen-Mono 350M embeddings of the two snippets.
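The distance computation itself is simple once the embeddings are available. The sketch below assumes each snippet has already been mapped to an embedding vector (obtaining the vectors from CodeGen-Mono 350M is not shown), and the function names are hypothetical. It also uses the identity that, for unit-length vectors, the squared L2 distance equals 2 − 2·cos θ, which gives a way to read a distance as a cosine similarity.

```python
import math


def l2_normalize(vector: list[float]) -> list[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


def embedding_distance(u: list[float], v: list[float]) -> float:
    """L2 distance between the unit-normalized versions of u and v."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same dimension")
    u_hat, v_hat = l2_normalize(u), l2_normalize(v)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u_hat, v_hat)))


def implied_cosine(distance: float) -> float:
    """Cosine similarity implied by an L2 distance between unit vectors.

    For unit vectors, d^2 = 2 - 2*cos(theta), so cos(theta) = 1 - d^2 / 2.
    """
    return 1.0 - distance ** 2 / 2.0


# Toy 2-D vectors standing in for real embeddings (illustrative only).
print(embedding_distance([2.0, 0.0], [5.0, 0.0]))  # same direction -> 0.0
print(embedding_distance([1.0, 0.0], [0.0, 1.0]))  # orthogonal -> sqrt(2)
print(implied_cosine(0.16))
```

Because the vectors are normalized, the distance lies between 0 and 2 and depends only on the angle between the embeddings. Under this identity, a distance of 0.16 corresponds to a cosine similarity of about 0.987.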

This paper is available on arXiv under the CC BY 4.0 DEED license.